Using AI to build design systems w/ Figma MCP

TJ Pitre on the future of design systems, using Figma MCP and Contextual Engineering

Jasmine Kaur, Strategic AI Designer @Georgia Tech

TJ Pitre is a front-end engineer and design systems strategist with over 20 years of experience helping product teams ship faster and more consistently. As the founder and CEO of Southleft, he’s built a consultancy trusted by teams at NASDAQ, Caterpillar, Indeed, and IBM—known for its strong culture of creativity, truth, and craftsmanship.

In this episode of our podcast How I Vibe Design, TJ dives into how AI is reshaping the way we design, prototype, and build. He shares his process for blending atomic design principles with AI-assisted workflows using tools like Figma MCP, Cursor, and his own creations—FigmaLint, Story UI, and Design Systems Assistant MCP. This article highlights our biggest takeaways from that conversation.

What is the difference between vibe coding and contextual engineering?

When I don’t know much about something and want to workshop the idea while building it, I call that solo activity a vibe coding session. The purpose is to create something quickly—driven by intuition and everyday language.

Contextual engineering, on the other hand, starts with clear requirements and specifications before any code is written. It aims for efficiency, precision, and scalability in large applications. It requires thinking like a product owner—writing user stories and defining technical architecture. In my workflow, I use tools like Claude Code to refine and validate specs before generating code.

Prompts in a vibe coding session start with feeling-based phrases like “I feel like it should do this.” The vocabulary leans on emotional or conceptual cues rather than technical details. As I explore the idea, the AI gains context from the conversation. However, the build can fail if expectations exceed the AI’s understanding.

When I’m building with AI, I shift from “building by vibe” to engineering with intent. I ensure the AI knows why a component exists—not just what it looks like. That involves multiple roles—product owner, architect, and developer—and structured documentation including statements like “As a user, I can…”.

The result? Contextual engineering significantly reduces design–development gaps, saves time and cost, and produces cleaner, more consistent code.
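As a rough illustration of "context before code", the structured documentation described above could be captured as data and turned into a prompt. This is a minimal sketch, not TJ's actual template: the `ComponentSpec` shape, the `buildPrompt` helper, and the example user stories are all hypothetical.

```typescript
// Hypothetical spec shape: the field names are assumptions for illustration.
interface ComponentSpec {
  name: string;
  purpose: string;          // why the component exists
  userStories: string[];    // "As a user, I can ..." statements
  constraints: string[];    // technical or architectural rules
}

// Assemble the spec into a plain-text prompt, one item per line.
function buildPrompt(spec: ComponentSpec): string {
  return [
    `Component: ${spec.name}`,
    `Purpose: ${spec.purpose}`,
    "User stories:",
    ...spec.userStories.map((story) => `- ${story}`),
    "Constraints:",
    ...spec.constraints.map((rule) => `- ${rule}`),
  ].join("\n");
}

// Example values are invented for this sketch.
const bannerSpec: ComponentSpec = {
  name: "Banner",
  purpose: "Surface a short, dismissible message at the top of a page.",
  userStories: [
    "As a user, I can dismiss the banner so it stops covering content.",
    "As a user, I can tell the severity of the message from its color and icon.",
  ],
  constraints: ["Use design tokens only; no hardcoded colors or spacing."],
};

const specPrompt = buildPrompt(bannerSpec);
console.log(specPrompt);
```

Keeping the spec as data means the same requirements can be reviewed by a person and reused across prompts.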

What does contextual engineering for a design system component look like in action?

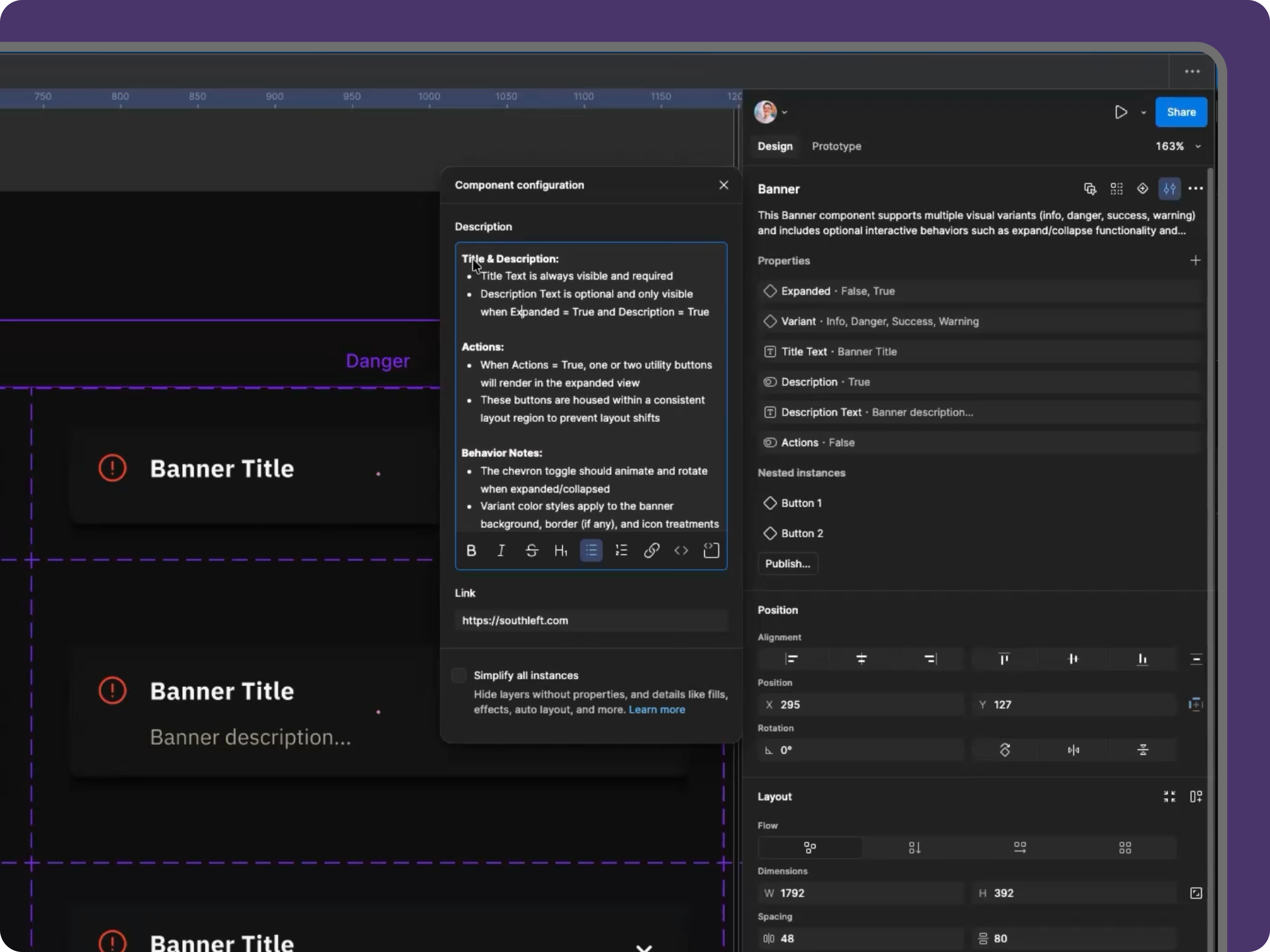

My process begins in Figma, where I build components with detailed properties, tokens, and descriptions explaining their purpose and intent.

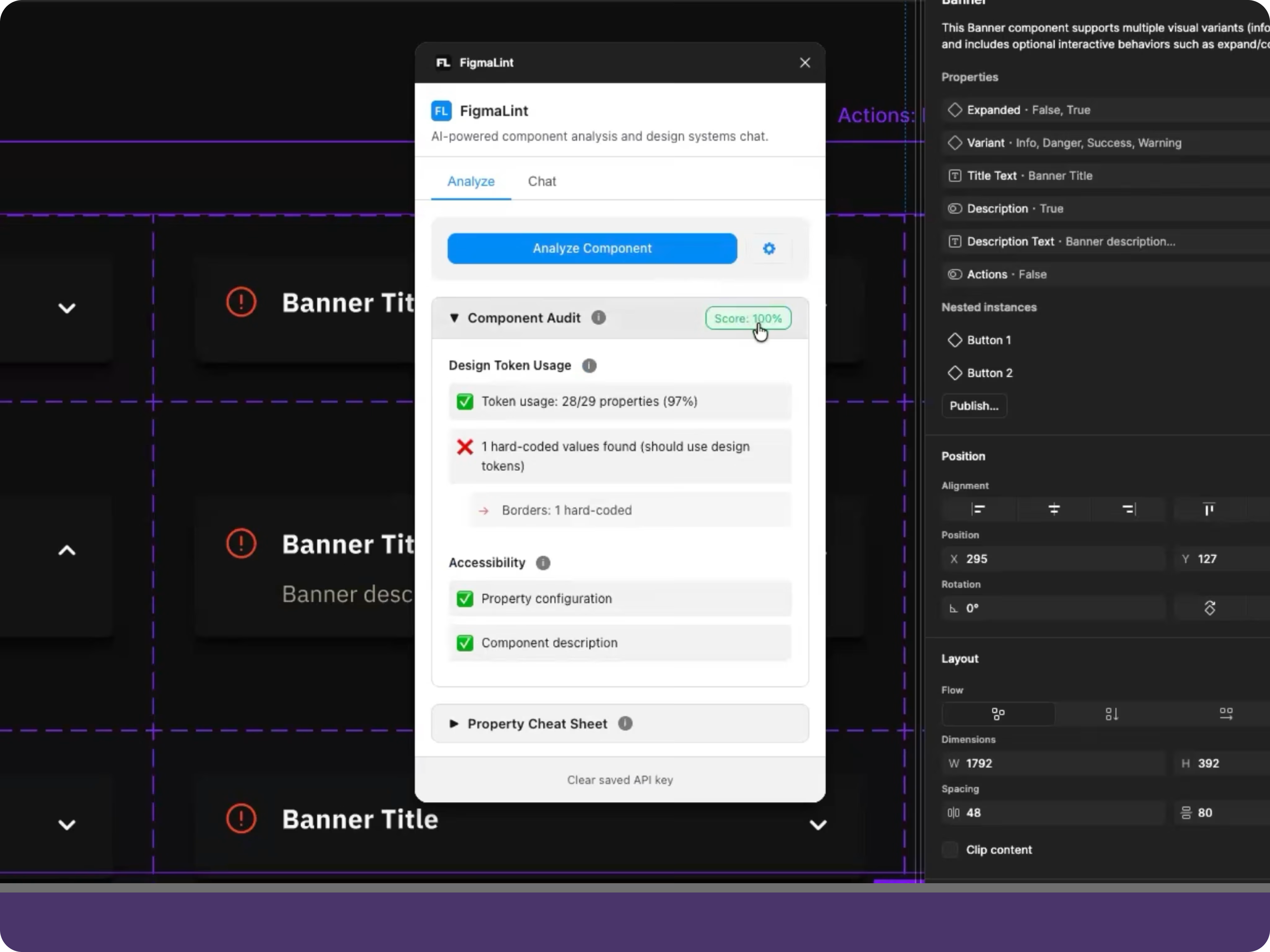

I then run FigmaLint to ensure everything scores above 90, and copy the selection link for the component.

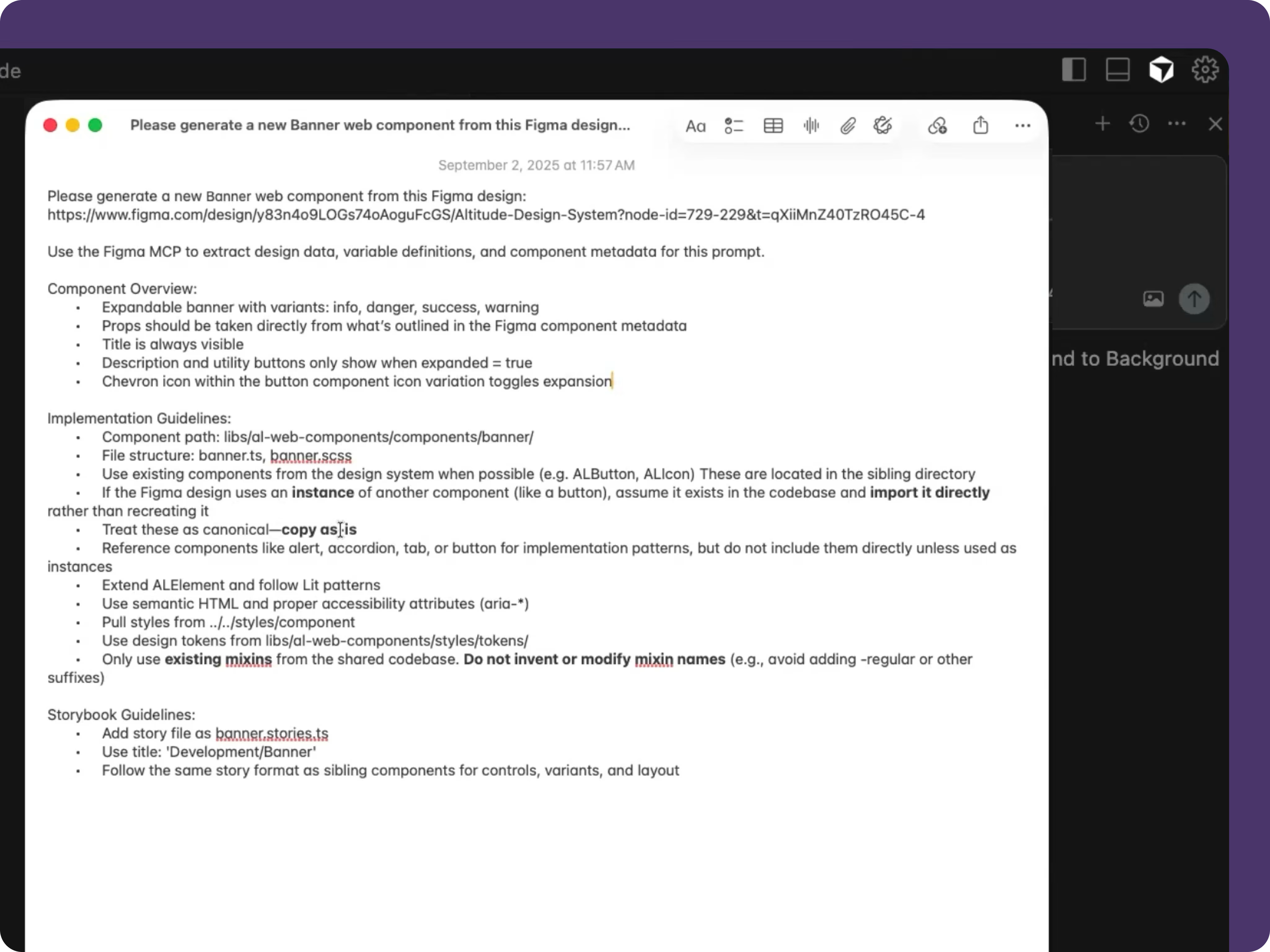

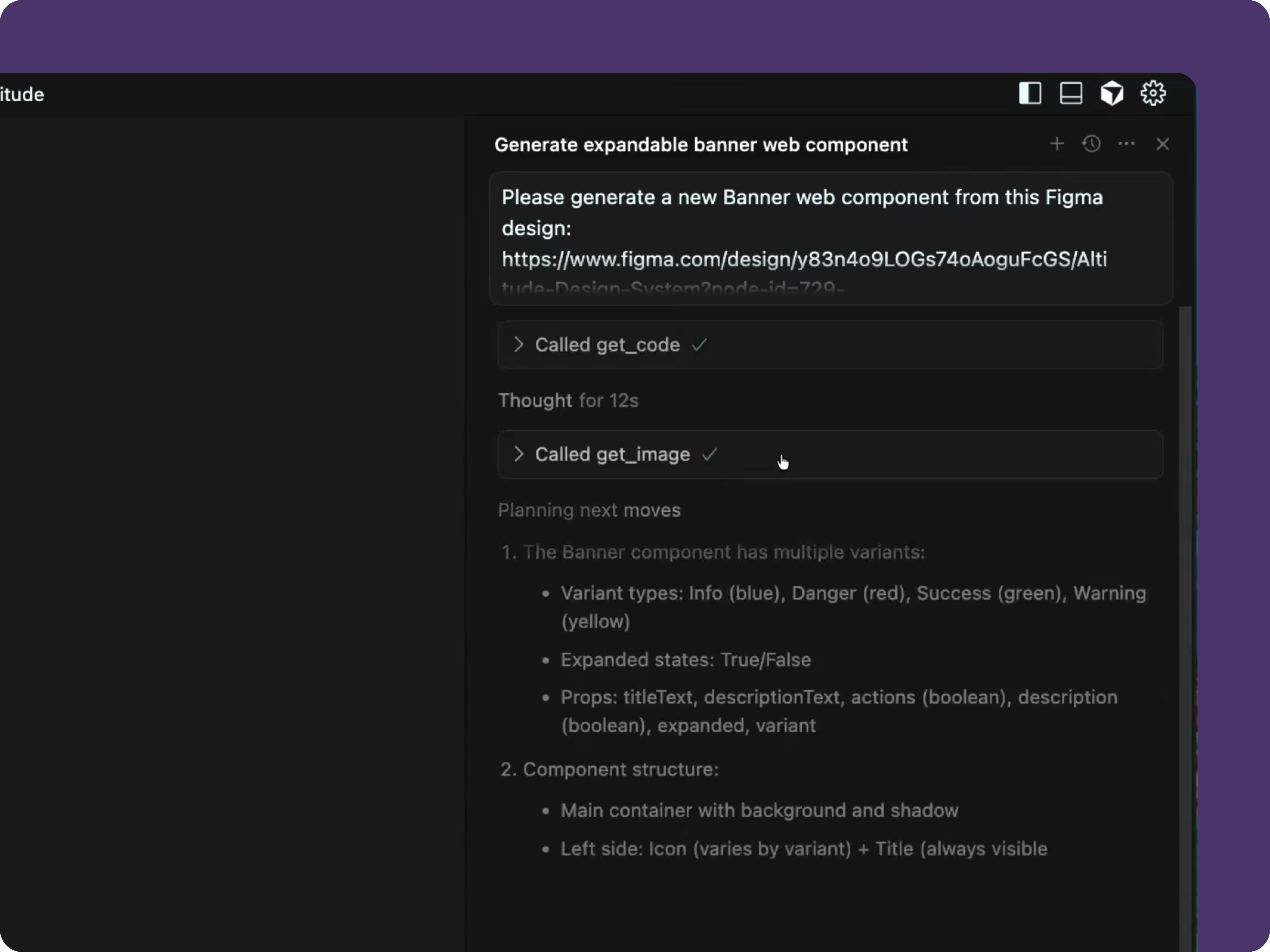

Next, I switch to Cursor, paste the link into a prompt template, and instruct the AI to use the Figma MCP to extract the design.

MCP pulls a JSON payload describing the component’s structure, styles, and tokens. The AI then generates TypeScript, CSS modules, and Storybook stories—organized into the right directories.
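To make the hand-off concrete, here is a minimal sketch of what a simplified payload and the resulting file plan might look like. The `ComponentPayload` shape, the `planFiles` helper, and the `src/components` layout are assumptions for illustration; the real Figma MCP response is richer and structured differently.

```typescript
// Hypothetical, simplified payload. Not the actual Figma MCP schema.
interface ComponentPayload {
  name: string;                    // component name, e.g. "Banner"
  description: string;             // intent written by the designer in Figma
  tokens: Record<string, string>;  // token name -> resolved value
  variants: string[];              // variant names defined on the component
}

// Plan the files a generator might emit for one component:
// a TypeScript component, a CSS module, and a Storybook story.
function planFiles(payload: ComponentPayload, root: string = "src/components"): string[] {
  const dir = `${root}/${payload.name}`;
  return [
    `${dir}/${payload.name}.tsx`,
    `${dir}/${payload.name}.module.css`,
    `${dir}/${payload.name}.stories.tsx`,
  ];
}

// Example values are invented for this sketch.
const banner: ComponentPayload = {
  name: "Banner",
  description: "Announces a short, page-level message.",
  tokens: { "color.surface.info": "#e6f0ff", "space.md": "12px" },
  variants: ["info", "warning"],
};

const files = planFiles(banner);
console.log(files.join("\n"));
```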

As the code is generated, I monitor it live to see how the AI parses typography, tokens, and images from Figma. Once it finishes, I review the results in Storybook, where components are typically 80–85% complete. I inspect spacing, icons, and states, then use prompts or minor manual edits to polish details.

The entire process converts what once took two to three days into a 10-minute workflow, blending automation with human oversight.

How does atomic design come into play in the world of quick prototyping with AI tools?

Atomic design provides a structural foundation when working with AI tools like Figma MCP and Claude Code. By breaking components into atoms, molecules, and organisms, teams can manage complexity systematically.

For example, a banner component is not an atom (too complex) and not quite an organism (too small). It’s a middle layer that illustrates how AI can handle reusable, layered structures. Focusing AI on generating small, well-defined elements—rather than entire products—helps teams prototype faster and maintain clean, reusable code.

Most design systems are roughly 60% components, so this approach boosts efficiency, minimizes debugging, and enables rapid iteration—without sacrificing structure.
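One way to keep the atom, molecule, and organism layers honest is to record each component's level and check that nothing depends on a higher layer. The sketch below assumes that rule and a hypothetical `ComponentDef` shape; neither comes from the conversation, and real systems may allow looser composition.

```typescript
type Level = "atom" | "molecule" | "organism";

// Lower rank means a smaller building block.
const rank: Record<Level, number> = { atom: 0, molecule: 1, organism: 2 };

// Hypothetical registry entry: a component, its level, and what it composes.
interface ComponentDef {
  name: string;
  level: Level;
  uses: string[];
}

// Report components that depend on an unknown component or on a higher layer.
function findLayeringViolations(defs: ComponentDef[]): string[] {
  const byName = new Map<string, ComponentDef>(
    defs.map((d): [string, ComponentDef] => [d.name, d])
  );
  const problems: string[] = [];
  for (const def of defs) {
    for (const childName of def.uses) {
      const child = byName.get(childName);
      if (child === undefined) {
        problems.push(`${def.name} uses unknown component ${childName}`);
      } else if (rank[child.level] > rank[def.level]) {
        problems.push(`${def.name} (${def.level}) depends on ${child.name} (${child.level})`);
      }
    }
  }
  return problems;
}

// A banner sits in the middle layer, composed from atoms.
const library: ComponentDef[] = [
  { name: "Icon", level: "atom", uses: [] },
  { name: "Text", level: "atom", uses: [] },
  { name: "Banner", level: "molecule", uses: ["Icon", "Text"] },
  { name: "PageHeader", level: "organism", uses: ["Banner"] },
];

const violations = findLayeringViolations(library);
console.log(violations); // prints [] for this library
```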

How has the role of Figma changed in building design systems?

Figma components now include rich properties, tokens, and natural-language descriptions that communicate both purpose and intent. This helps Figma MCP understand not only what an element is but also why it exists, which enables smarter code generation.

I run FigmaLint on each component before handoff, aiming for a 90+ score to surface hardcoded values or missing metadata early—reducing downstream friction.
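The kind of check described here can be sketched as a small scoring function. This is not FigmaLint's actual rubric: the `ComponentMeta` shape, the `token:` prefix convention, and the 10-point penalty per issue are all assumptions made for illustration.

```typescript
// Hypothetical component metadata. A style value that starts with "token:"
// is treated as bound to a design token; anything else counts as hardcoded.
interface ComponentMeta {
  name: string;
  description: string;
  styles: Record<string, string>;
}

interface LintResult {
  score: number;     // 0 to 100, higher is better
  issues: string[];  // human-readable findings
}

// Flag a missing description and hardcoded style values,
// then subtract an assumed 10 points per issue from 100.
function lintComponent(meta: ComponentMeta): LintResult {
  const issues: string[] = [];
  if (meta.description.trim() === "") {
    issues.push("missing description");
  }
  for (const prop of Object.keys(meta.styles)) {
    const value = meta.styles[prop];
    if (typeof value === "string" && !value.startsWith("token:")) {
      issues.push(`hardcoded value for ${prop}: ${value}`);
    }
  }
  return { score: Math.max(0, 100 - issues.length * 10), issues };
}

// Example values are invented for this sketch.
const bannerMeta: ComponentMeta = {
  name: "Banner",
  description: "Announces a short, page-level message.",
  styles: { background: "token:color.surface.info", padding: "12px" },
};

const report = lintComponent(bannerMeta);
console.log(report.score, report.issues);
```

Here the hardcoded `padding` is the only finding, so the sketch reports a score of 90 with one issue.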

Pro tips:

- Clear properties and metadata reduce developer guesswork.

- Run sub-agents to catch duplicates and enforce component reuse.

- Document intent and maintain clean taxonomy to support long-term scalability.
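The duplicate check in the tips above can be approximated without any AI at all. The sketch below is a plain heuristic, not how the sub-agents mentioned here work: it assumes a hypothetical `ComponentSummary` shape and treats components with the same set of property names as likely duplicates.

```typescript
// Hypothetical summary of a component: its name and its property names.
interface ComponentSummary {
  name: string;
  props: string[];
}

// Build an order-insensitive, case-insensitive key from the property names.
function signature(component: ComponentSummary): string {
  return component.props.map((p) => p.toLowerCase()).sort().join("|");
}

// Group components by signature and return groups with more than one member.
function findLikelyDuplicates(components: ComponentSummary[]): string[][] {
  const groups = new Map<string, string[]>();
  for (const component of components) {
    const key = signature(component);
    const names = groups.get(key) ?? [];
    names.push(component.name);
    groups.set(key, names);
  }
  return Array.from(groups.values()).filter((names) => names.length > 1);
}

// Example values are invented for this sketch.
const inventory: ComponentSummary[] = [
  { name: "Banner", props: ["title", "variant", "icon"] },
  { name: "Alert", props: ["Icon", "Title", "Variant"] },
  { name: "Button", props: ["label", "variant"] },
];

const duplicates = findLikelyDuplicates(inventory);
console.log(duplicates);
```

A match is only a prompt for review; two components can share property names and still serve different purposes.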

Earlier bridges included Style Dictionary, Generator Lab’s YAML-based flow, and Figma Bridge, all precursors to today’s MCP workflows. Each reinforced Figma’s evolving role as the upstream source of truth for design and code.

Today, a component that once took 1–3 days manually now reaches 80–85% completeness in about 10 minutes—with Figma serving as the AI-readable blueprint.

What will contextual engineering look like a year from now?

The next frontier is refinement. Soon, AI will require far less detailed prompting to produce high-quality results. Where prompts once needed exact instructions about file structures and behaviors, future models will infer those details from context.

Early models required explicit instructions. But newer ones, like Opus 4.1 and beyond, can interpret architecture and design intent automatically—enabling faster, smoother builds.

Ultimately, contextual engineering will evolve into a more autonomous, seamless process where AI deeply understands context, allowing humans to focus on intent, quality, and creative direction.

What tools have surprised you in bridging the gap between design and code?

- Style Dictionary: The first real bridge, translating design tokens (colors, typography) into CSS variables from Figma’s API.

- Generator Lab: YAML-based tool for defining and generating components—an early step toward machine-readable structure.

- Figma Bridge (VS Code Extension): Used personal access tokens to extract component JSONs and feed them into AI-ready environments.

- FrameLink: A third-party MCP-like tool that predated the official version, providing a bridge between Figma and AI workflows.

- Story UI: A custom-built tool that tested AI-generated components in live templates and grids, surfacing integration issues early.

- Sub-agent Checks: Automated tools that flag duplicate components and encourage reuse, reinforcing consistency across builds.
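The token-to-CSS translation mentioned for Style Dictionary can be illustrated with a small flattening function. This is a hand-rolled sketch of the idea, not Style Dictionary's API; the `TokenTree` type, the `toCssVariables` helper, and the token values are assumptions.

```typescript
// Nested design tokens: leaves are string values, branches are groups.
type TokenTree = { [key: string]: string | TokenTree };

// Walk the tree and emit one CSS custom property per leaf,
// joining the path segments with hyphens.
function toCssVariables(tokens: TokenTree, prefix: string[] = []): string[] {
  const lines: string[] = [];
  for (const key of Object.keys(tokens)) {
    const value = tokens[key];
    const path = [...prefix, key];
    if (typeof value === "string") {
      lines.push(`--${path.join("-")}: ${value};`);
    } else if (typeof value === "object") {
      lines.push(...toCssVariables(value, path));
    }
  }
  return lines;
}

// Example values are invented for this sketch.
const tokens: TokenTree = {
  color: { brand: { primary: "#0055ff" } },
  font: { size: { body: "16px" } },
};

const declarations = toCssVariables(tokens);
const stylesheet = `:root {\n${declarations.map((d) => `  ${d}`).join("\n")}\n}`;
console.log(stylesheet);
```

For the tokens above, the declarations are `--color-brand-primary: #0055ff;` and `--font-size-body: 16px;`, wrapped in a `:root` block.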

How do you decide when AI’s code is “good enough” to move forward?

At Southleft, nothing ships without human review. Even though AI accelerates the process, each generated component is manually verified.

We monitor generation live, observe how MCP builds files, and review the result in Storybook. From there, details like spacing, padding, and icons are refined through prompts or manual edits. The AI may handle 80–85% of the work—but craftsmanship still requires human oversight.

What is the first step to get started with AI-assisted building?

Start with context, not code. Define requirements, user stories, and architecture before prompting. Once you know the what and the why, AI can handle the how.
